A single second of downtime can mean losing thousands of customers. Imagine an e-commerce site during a flash sale or a banking application on payday; massive traffic spikes can overwhelm servers and eventually crash them. This is the problem load balancing aims to solve.

For developers, system administrators, and business owners alike, understanding load balancing is no longer optional; it is a necessity for building reliable and scalable applications.

This article explains what a server load balancer is, covering how it works, its main types, and a simple architecture example you can implement. Let’s get started.

What Is Load Balancing?

Simply put, load balancing is the process of distributing network or application traffic across multiple backend servers so that no single server becomes overwhelmed. Think of a load balancer as a clever traffic manager at the entrance to a highway with many toll gates. Instead of letting all the cars pile up at one gate, the manager directs them to less busy gates, so queues stay short and traffic keeps flowing.

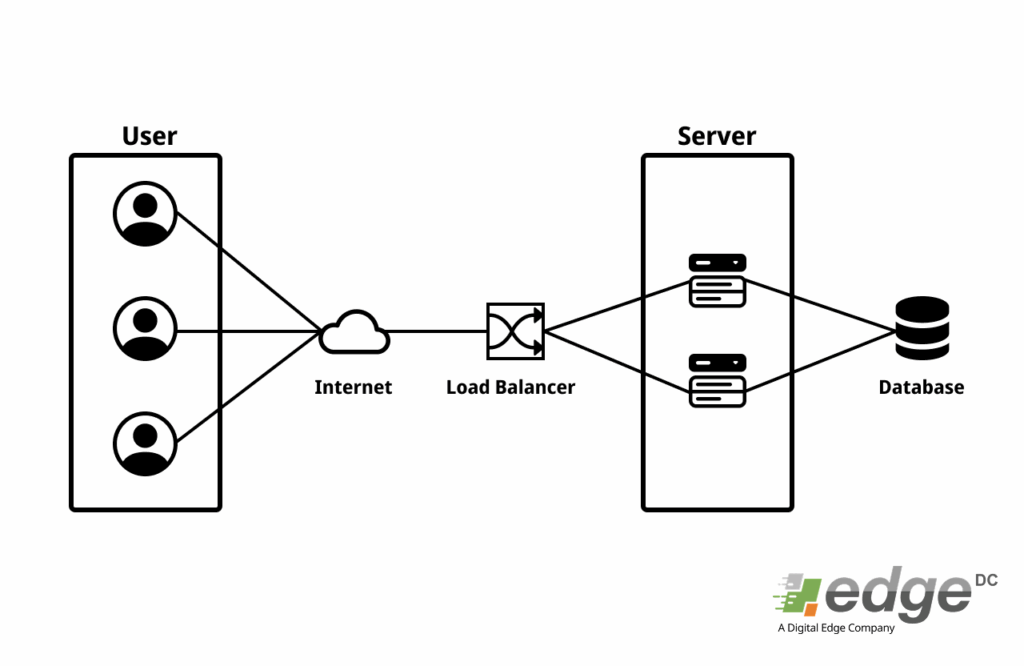

In the digital world, “cars” are requests from users (like opening a webpage or making a transaction), and “toll gates” are your servers. The load balancer sits between users and your server farm, acting as a single point of entry that then efficiently distributes the workload.
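The toll-gate analogy matches a common distribution strategy known as least connections: each new request goes to the server currently handling the fewest connections. The article doesn’t prescribe a specific algorithm, so treat the following as a minimal Python sketch of that one strategy. The `Backend` class and the function names are hypothetical, and a real load balancer would also decrement the count when a connection closes.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """A backend server in the pool (hypothetical model for illustration)."""
    name: str
    active_connections: int = 0


def pick_least_connections(pool: list[Backend]) -> Backend:
    """Return the backend with the fewest active connections.

    Ties go to the backend that appears first in the pool, because
    min() returns the first minimal element it encounters.
    """
    if not pool:
        raise ValueError("no backends available")
    return min(pool, key=lambda b: b.active_connections)


def route_request(pool: list[Backend]) -> str:
    """Send one request to the least busy backend and record the new connection."""
    backend = pick_least_connections(pool)
    backend.active_connections += 1
    return backend.name


if __name__ == "__main__":
    servers = [Backend("server-a", 3), Backend("server-b", 1), Backend("server-c", 2)]
    print([route_request(servers) for _ in range(4)])
```

Here `server-b` starts with the fewest connections and receives the first two requests; once the counts even out, later requests spread across the other servers.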

Why Is Load Balancing So Important?

Implementing load balancing provides three key advantages crucial for modern applications:

- Improved Performance and Speed: Because the workload is divided, no single server is overloaded. Requests are handled faster, which reduces latency and improves the overall user experience.

- High Availability and Redundancy: If one of your servers fails (e.g., due to hardware issues) or is taken offline for maintenance, the load balancer automatically stops sending traffic to it. New requests go to the remaining healthy servers, and most users never notice the problem. This is the key to achieving near-zero downtime.

- Flexible Scalability: As your business grows and traffic increases, you don’t have to replace existing servers with more powerful ones (vertical scaling). Instead, you can add new servers to the pool (horizontal scaling), and the load balancer will include them in the traffic rotation, typically as soon as they pass their health checks. This approach is often more cost-effective and flexible.
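To illustrate horizontal scaling, here is a minimal Python sketch of a round-robin pool, one common distribution strategy that is assumed here because the article doesn’t name one. The class name `RoundRobinPool` and its methods are hypothetical. A server added with `add_server` starts receiving requests on the next pass through the rotation, and `remove_server` takes one out, for example during maintenance.

```python
class RoundRobinPool:
    """A minimal round-robin pool that can grow or shrink at runtime (illustrative only)."""

    def __init__(self, servers: list[str]) -> None:
        self._servers = list(servers)
        self._next = 0  # index of the server that receives the next request

    def add_server(self, server: str) -> None:
        """Horizontal scaling: the new server joins the rotation right away."""
        self._servers.append(server)

    def remove_server(self, server: str) -> None:
        """Take a server out of rotation (e.g., for maintenance).

        In this simple list-based sketch, removal can shift which server is
        "next"; production balancers handle this more carefully.
        """
        self._servers.remove(server)

    def next_server(self) -> str:
        """Return the next server in rotation, cycling back to the start."""
        if not self._servers:
            raise RuntimeError("pool is empty")
        # Wrap the index in case the pool shrank since the last call.
        self._next %= len(self._servers)
        server = self._servers[self._next]
        self._next = (self._next + 1) % len(self._servers)
        return server


if __name__ == "__main__":
    pool = RoundRobinPool(["app-1", "app-2"])
    print([pool.next_server() for _ in range(4)])
    pool.add_server("app-3")  # scale out by one server
    print([pool.next_server() for _ in range(3)])
```

Round robin is the simplest strategy to reason about, but it assumes every server has similar capacity; weighted or least-connections strategies cope better with uneven servers.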


How Load Balancers Work: Layer 4 vs. Layer 7

Load balancers do not all work the same way. The main difference lies in the OSI Model layer at which they operate. The two most common types are Layer 4 and Layer 7.

Layer 4 Load Balancing (Transport Layer)

A Layer 4 load balancer operates at the transport layer. It makes routing decisions based on connection-level information, such as source/destination IP addresses, port numbers, and the protocol (TCP or UDP).

- How it Works: It forwards data packets from users to servers without inspecting the packet content. Because its “job” is simpler, the process is very fast.

- Advantages: Very fast and efficient.

- Disadvantages: Not “aware” of application content. It doesn’t know whether the request is for an image, video, or API data. All types of TCP/UDP traffic are treated the same.
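Because a Layer 4 balancer sees only addresses, ports, and the protocol, one common way for it to choose a backend is to hash those fields, which also keeps every packet of a given TCP connection on the same server. The following is a minimal Python sketch of that idea; `ConnectionTuple` and `pick_backend_l4` are hypothetical names, and the addresses are placeholders.

```python
import hashlib
from typing import NamedTuple


class ConnectionTuple(NamedTuple):
    """Transport-level fields a Layer 4 balancer can see without reading the payload."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "tcp" or "udp"


def pick_backend_l4(conn: ConnectionTuple, backends: list[str]) -> str:
    """Choose a backend by hashing the connection tuple.

    The payload is never examined: an image request, a video stream, and an
    API call on the same connection all map to the same backend.
    """
    if not backends:
        raise ValueError("no backends available")
    key = f"{conn.src_ip}:{conn.src_port}-{conn.dst_ip}:{conn.dst_port}/{conn.protocol}"
    # A stable hash (unlike Python's built-in hash(), which is randomized per
    # process for strings) keeps the mapping consistent across restarts.
    digest = hashlib.sha256(key.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    backends = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
    conn = ConnectionTuple("203.0.113.7", 51514, "198.51.100.1", 443, "tcp")
    print(pick_backend_l4(conn, backends))
```

A plain modulo, as used here, remaps many connections whenever the pool size changes; production balancers often use consistent hashing to limit that disruption.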

Layer 7 Load Balancing (Application Layer)

This is a more sophisticated type of load balancer and is commonly used for web app load balancing. It operates at the application layer, meaning it can “read” and understand the content of requests, such as HTTP headers, cookies, and URLs.

- How it Works: Because it understands the context of the request, it can make much smarter decisions. For example, it can direct all requests for /video/* to servers optimized for streaming, while requests for /api/* are directed to application servers.

- Advantages: Very flexible, allows for complex routing, and is ideal for microservices architectures.

- Disadvantages: Slightly slower than Layer 4 because it has to parse each request (and, for HTTPS traffic, decrypt it first), but the difference is usually not significant for most applications.
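The `/video/*` versus `/api/*` example above can be expressed as a small routing table. This minimal Python sketch assumes hypothetical pool names (`stream-1`, `app-1`, and so on) and a default pool for every other path; it only selects a pool, and a separate strategy such as round robin would then pick a server within it.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit

# Hypothetical routing table: (path pattern, backend pool). First match wins.
ROUTES: list[tuple[str, list[str]]] = [
    ("/video/*", ["stream-1", "stream-2"]),      # servers tuned for streaming
    ("/api/*", ["app-1", "app-2", "app-3"]),    # application servers
]
DEFAULT_POOL = ["web-1"]  # hypothetical catch-all pool


def choose_pool(request_target: str) -> list[str]:
    """Return the backend pool for a request, as a Layer 7 balancer might.

    Only the path is matched; the query string is ignored. fnmatchcase is
    used because URL paths are case-sensitive.
    """
    path = urlsplit(request_target).path
    for pattern, pool in ROUTES:
        if fnmatchcase(path, pattern):
            return pool
    return DEFAULT_POOL


if __name__ == "__main__":
    for target in ["/video/intro.mp4", "/api/v1/orders?limit=5", "/about"]:
        print(target, "->", choose_pool(target))
```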

Key Concepts in the Load Balancing Ecosystem

To maximize its functionality, a load balancer is supported by several important concepts:

- Health Checks: How does a load balancer know a server is “sick”? It periodically sends small probes to each server, such as a TCP connection attempt or an HTTP request to a status page. If a server fails several consecutive checks, it is marked as unhealthy and temporarily removed from rotation until it passes its checks again.

- Failover: This is the automatic action that follows a failed health check. The load balancer immediately redirects traffic that would have gone to the failed server to the remaining healthy servers. This is the mechanism behind high availability.

- Session Persistence (Sticky Sessions): Imagine a user filling a shopping cart. Their first request is served by Server A. If their second request (e.g., adding another item) is redirected to Server B, their shopping cart might be empty because Server B doesn’t have their session information. Session persistence ensures that all requests from a single user during one session will always be directed to the same server, maintaining data consistency.
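The three concepts above fit together in a few dozen lines. The following toy Python sketch assumes a hypothetical threshold of three consecutive failed checks before a server is marked unhealthy, treats a single successful check as recovery (real balancers usually require several), and pins each session ID to a server until that server becomes unhealthy. The class and method names are illustrative, not any tool’s API.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    healthy: bool = True
    consecutive_failures: int = 0


@dataclass
class LoadBalancer:
    """Toy balancer with health checks, failover, and sticky sessions (illustrative)."""
    servers: list[Server]
    failure_threshold: int = 3  # hypothetical: failed checks before marking unhealthy
    sessions: dict[str, str] = field(default_factory=dict)  # session_id -> server name
    _rr_index: int = field(default=0, init=False)

    def record_health_check(self, name: str, ok: bool) -> None:
        """Update a server's status from the result of one health probe."""
        server = self._get(name)
        if ok:
            # Simplification: one success is enough to rejoin the rotation.
            server.consecutive_failures = 0
            server.healthy = True
        else:
            server.consecutive_failures += 1
            if server.consecutive_failures >= self.failure_threshold:
                server.healthy = False

    def route(self, session_id: str) -> str:
        """Return the server for this session, failing over if its server is down."""
        pinned = self.sessions.get(session_id)
        if pinned is not None and self._get(pinned).healthy:
            return pinned  # sticky session: keep the user on the same server
        healthy = [s for s in self.servers if s.healthy]
        if not healthy:
            raise RuntimeError("no healthy servers")
        # New session or failover: pick the next healthy server round-robin.
        chosen = healthy[self._rr_index % len(healthy)]
        self._rr_index += 1
        self.sessions[session_id] = chosen.name
        return chosen.name

    def _get(self, name: str) -> Server:
        for server in self.servers:
            if server.name == name:
                return server
        raise KeyError(name)


if __name__ == "__main__":
    lb = LoadBalancer([Server("server-a"), Server("server-b")])
    print(lb.route("alice"))  # first request pins alice to a server
    for _ in range(3):
        lb.record_health_check("server-a", ok=False)
    print(lb.route("alice"))  # alice fails over to the healthy server
```

Failover moves the session to a new server, but any session data held only in the failed server’s memory is lost, which is why many applications keep session state in a shared store instead.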

Commonly Used Load Balancer Tools

There are many load balancer software options, both open-source and commercial. Here are three of the most popular:

- Nginx: Originally known as a web server, Nginx also has very reliable and performant reverse proxy and load balancing capabilities. Its easy configuration makes it a favorite choice for many.

- HAProxy: This is a “specialist” load balancer and proxy. HAProxy is known for its very high performance, low resource consumption, and proven stability.

- Traefik: A modern load balancer designed for the cloud-native and microservices era. Traefik’s main advantage is its ability to automatically detect new services (e.g., newly launched Docker containers) and configure routing without manual intervention.

Simple Architecture Example for Small to Medium Scale

Let’s visualize how all of this works together in a simple architecture:

Conclusion

Load balancing is no longer a luxury but a fundamental component in designing robust, fast, and scalable application architectures. By intelligently distributing workloads, it not only maintains application performance at peak levels but also provides a crucial safety net to ensure your services remain operational even when problems occur on one of the servers.

Choosing the right type of load balancer (Layer 4 or Layer 7) and configuring features like health checks and session persistence will be key to the success of your digital infrastructure.